Build OpenCV from source with CUDA for GPU access on Windows | by Ankit Kumar Singh | Analytics Vidhya | Medium

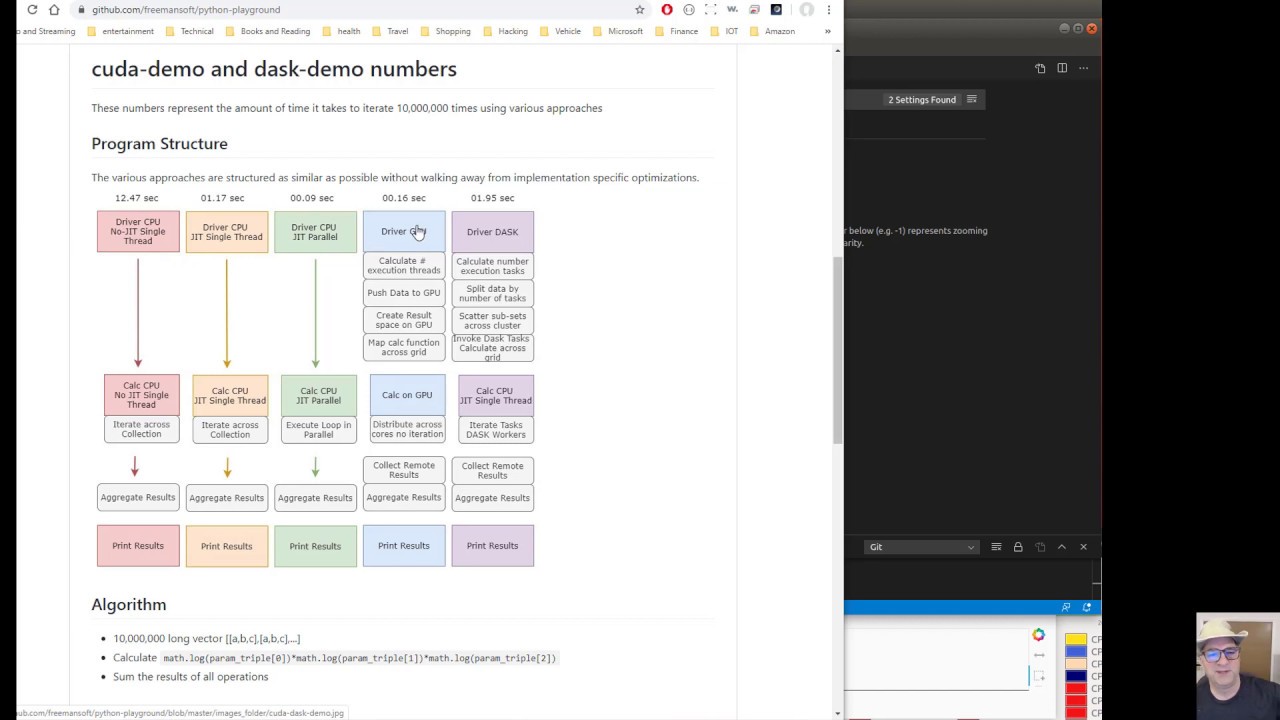

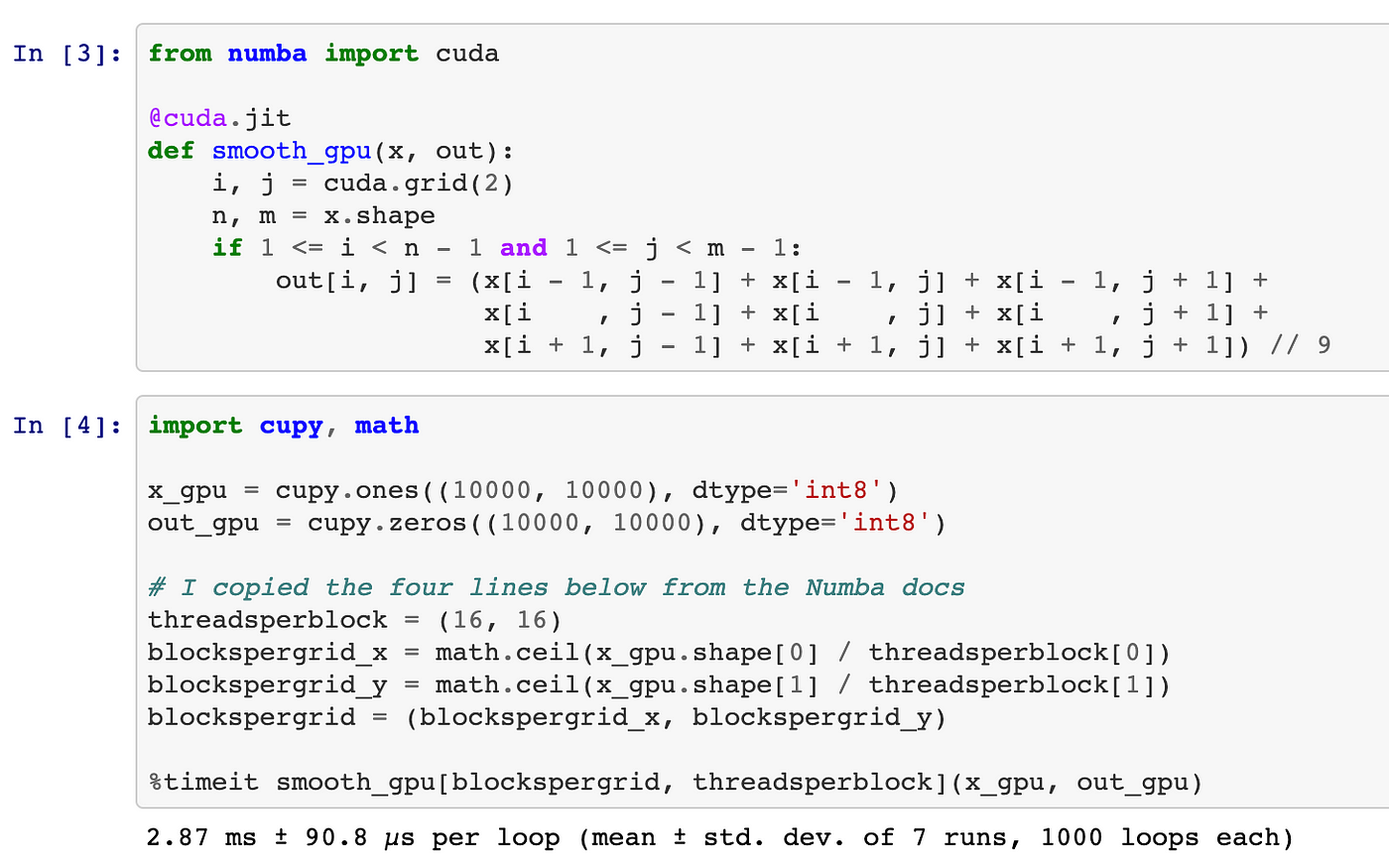

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

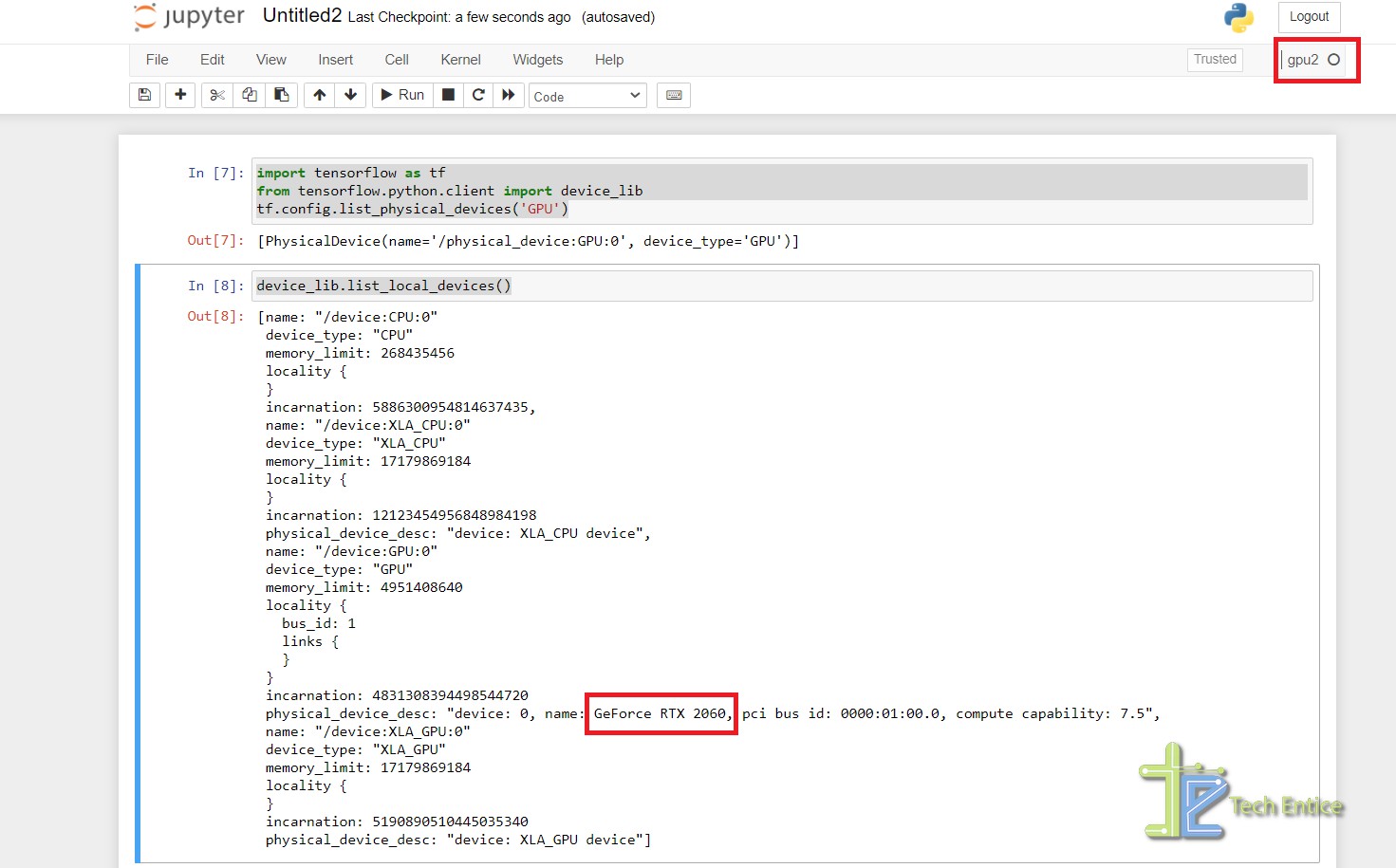

Why is the Python code not implementing on GPU? Tensorflow-gpu, CUDA, CUDANN installed - Stack Overflow

Hands-On GPU Programming with Python and CUDA: Explore high-performance parallel computing with CUDA: Tuomanen, Dr. Brian: 9781788993913: Books - Amazon

tensorflow - GPU utilization is N/A when using nvidia-smi for GeForce GTX 1650 graphic card - Stack Overflow

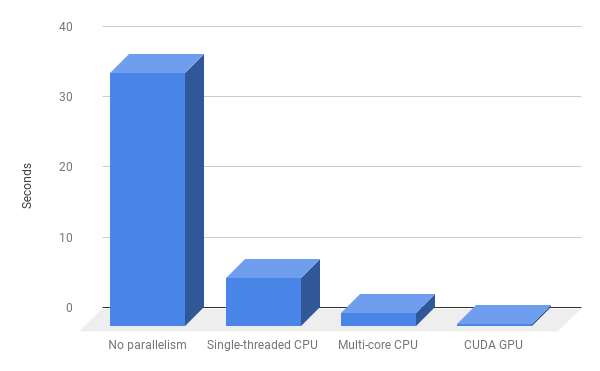

How GPU Computing literally saved me at work? | by Abhishek Mungoli | Walmart Global Tech Blog | Medium

How to build and install TensorFlow GPU/CPU for Windows from source code using bazel and Python 3.6 | by Aleksandr Sokolovskii | Medium